iKun AI CS 175: Project in AI (in Minecraft)

Project Summary

Our project makes use of Deep Q-Learning with Neural Network to let the agent find the shortest path between the source/starting block (unknown to the agent initially) and the destination block (unknown to the agent initially) in a pre-build 2D maze. The agent will be led to play in a set of pre-built Minecraft 2D mazes. The agent is able to see the voxels in front of its sight, which is similar to what a normal player can see in Minecraft (however, we might also change this to be a small range of voxels around the agent). The agent is given the full map of a maze, but it does not know source/starting block (the agent needs to locate itself on the map first) and the destination block (the agent needs to find its way out of the maze). The agent’s goal is trying to locate itself first and then get out of the maze as fast as possible using the limited resources we have given to the agent. The final output (for each maze/test) is the starting block of the agent and a set of positions of blocks ordered by the path sequence found by the agent (if optimal, should be the shortest path between agent’s starting block and the destination block).

In the previous version, we found that the training time was pretty long. Particularly, in many conditions, the agent would take a long time to take his actions i.e. turning around or moving forward while trying to evaluate his positions. In the Active Neural Localization project, once the agent hits the wall, he will return to his previous position. However, when the Minecraft agent simulates the actions from the Active Neural Localization project, he will directly fall into the lava which is considered as the walls in our minecraft project. The respwan will interrupt our simulation because the agent will start from the begining position. On the one hand, in the previous version, when we send the randomly generated map to the algorithm model, we have to wait for the training algorithm in the backend to finish analyzing all agent actions (by fitting a specific maze to the model) and then the agent in the frontend will simulate each action on the Minecraft map. However, in the improved version, our agent in the frontend can synchronously simulate the action at any particular moment once the algorithm in the backend generates an action. Synchronous simulation can better help us understand how the algorithm works and indicate directions to improve the algorithm or belief propagation equation.

Approach

In the project, we applied Bayesian filters to estimate the agent’s location dependingon its observations and the actions. For the agent, at each time step it has four known parameters: the map information

, the observation

, taken action

and estimated position

.

In our algorithm, at every time step, the agent will update its estimated position by

.

Belief function is

, Specifically, at each time step

, the agent will guess its potential position according to the Belief function result. Then agent will combine

with its observations

to improve its current position accuracy. The formula

is used to update belief position.

is used to identify where’s agent only depending on its current observation.

will give probability distribuition of every position in the map. The agent will take action

given by the policy function

. The belief poistion at time

is updated according to the action

and the

.

After taking several actions, the agent will give an estimated position with the maximun probability.

The pre-trained model we used is based on the model in the Active Neural Localization project developed by Carnegie Mellon University Ph.D. student Devendra Chaplot. The base model were trained with Asynchronous Advantage Actor Critic (A3C) learning algorithm using Stochastic Gradient Descent with a learning rate of 0.001. The weight for entropy regularization was 0.01. The discount factor for reinforcement learning was chosen to be 0.99. There were two sets of configuration of the model for 2D experiments and 3D experiments, respectively. Since we currently run our agent in all instances of 2D mazes, the pre-trained model under 2D experiments will be utilized. This model was trained with the use of multithreading (using torch.multiprocessing). The final data collected was the pre-trained model that has the best performance (accuracy) among all threads. Multiple training processes ran simultaneously when both training and testing. The pre-trained models are available for mazes with the size of 7, 15 and 21, with various lengths (agent’s action number limit) of 15, 20, 30, 40, 60. Our demo uses size of 21 with length of 60 in this case to allow the agent perform the most actions with high accuracy.

Compared with Aiku v1.0 which we had shown in our status report, Aiku v2.0 is mainly focused on optimizing the overall performance of our agent. In fact, the agent can be up to about 11.5 times faster than the previous generation of Aiku. Here are some ways that we have used to achieve such huge performance improvement. First, we completely redesigned the way our agent interacts with our Neural Network Machine Learning Algorithm, which is what we named as “the bridge.” By allowing our agent to show what the algorithm has done in real time, our agent now no longer needs to be put in sleep during each running session of the algorithm. Second, just like what we have mentioned in the first part, we made our agent go into sleep as little as possible. With such implementation, our agent will now be able to take less rest but do more job. In general, the improvement we made basically means we have defeated an old saying: “You can’t expect the horse to run fast when you don’t let it graze.” Finally, we have now made use of multi-processing in our agent, moving the time-consuming jobs into seperate processes so that our agent will not get into such “traffic jam” anymore. Of course we cannot just simply use a seperate process since our agent needs to be fed with data for exploring the maze, so we did apply some tricks to achieve this.

Evaluation

A very crucial aspect of how our agent completes its mission is how it determines which route to take, which heavily depends on the rewards system. At each time step, the agent will make a belief guess based on its orientation and position on the maze and select the largest belief value from the set of belief guesses. The belief made at the moment of selection will the reward for the current time step.

For evaluation, we’ve run the agent with the A3C algorithm within a controlled set of mazes (with specified maze sizes and action limits). Specifically, the maze size is set as 21 (a 21x21 square maze), and the action limit is set as 60 (the max number of actions that the agent is allowed to play during a test is 60).

In order to evaluate the overall performance of our agent, we have collected the following categories of statistical data during the 10 tests: the number of actions the agent has taken during training (60 actions for each test), the time taken for fitting the maze map to the pre-trained model (during each test), the time taken for the agent to determine the shortest path from start to end after the agent determines its current position (using the pre-trained model, during each test) and the success/failure rate of the 10 tests.

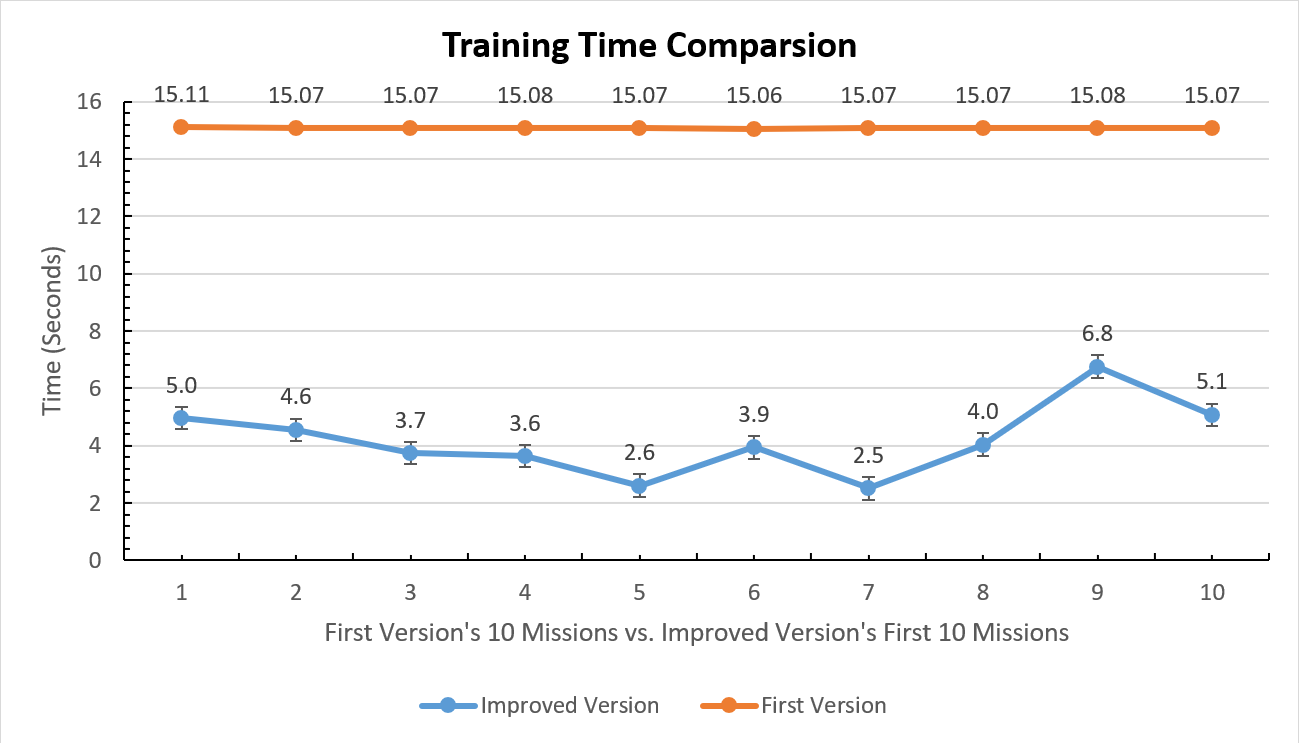

Based on the training time comparison graph, in our improved version of iKun AI, the aveage time taken for fitting the maze map to the pre-trained model among 50 tests is 3.21 seconds. The standard deviation is 0.48 seconds. In our first version of iKun AI, the aveage time taken for fitting the maze map to the pre-trained model among 10 tests was 15.08 seconds. The standard deviation was 0.01 seconds. In comparison, our improved agent has significantly faster (5x) training speed.

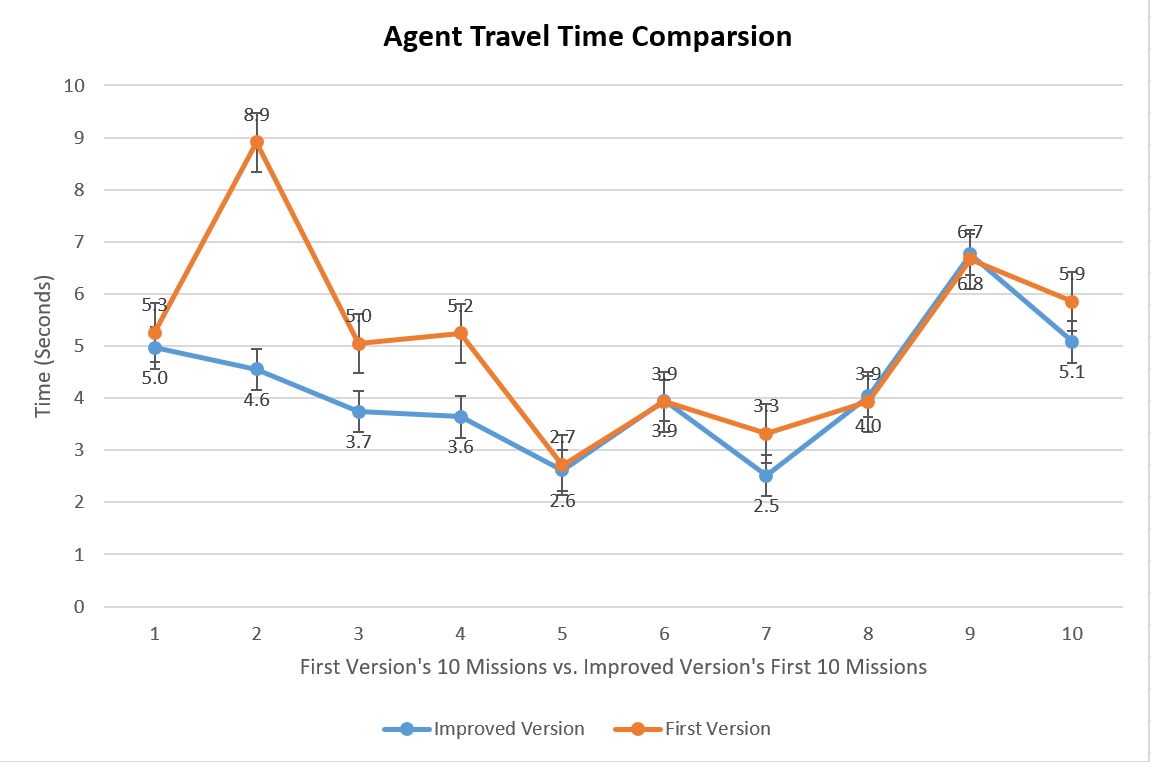

Based on the agent travel time comparison graph, in our improved version of iKun AI, the aveage time taken for the agent to determine the shortest path from start to end after the agent determines its current position among 50 tests is 4.6 seconds. The standard deviation is 1.49 seconds. In our first version of iKun AI, the aveage time taken for the agent to determine the shortest path from start to end after the agent determines its current position among 10 tests was 5.09 seconds. The standard deviation was 1.63 seconds. In comparison, our improved agent has a travel speed that is about half of a second faster than that of the first version agent.

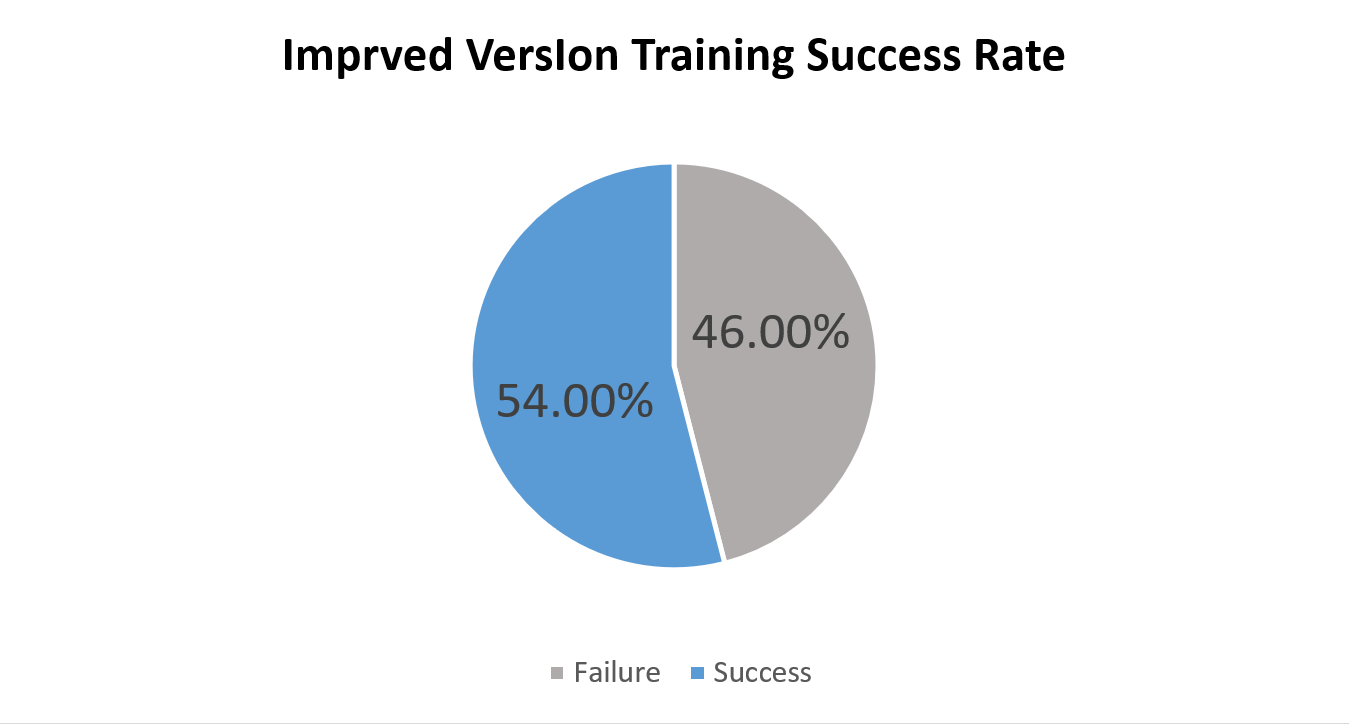

According to the success/failure graph above, our agent current has an approximate success rate of 54% given a random maze with a specified maze sizes and action limits. In our first version of iKun AI, our agent had an approximate success rate of 30% given a random maze with a specified maze sizes and action limits. In comparison, our improved agent has 24% better success rate than that of the first version agent.

In addition, we have tried to verify the feasibility of training a A3C model with map size of 99 by 99 unit blocks and have obtained a partially successful result to a certain degree, due to the very limited computing power that we have. Even though the numerical increase from 21x21 to 99x99 is about 4.5 times, the actual computing power needed for the larger scale training increases exponentially.

Resources Used

Asynchronous Methods for Deep Reinforcement Learning

PyTorch implementation of the ICLR-18 paper

PyTorch implementation of Asynchronous Advantage Actor Critic (A3C)